The Model Context Protocol (MCP) standardizes how AI applications interact with external tools and data sources. An open standard developed by Anthropic, MCP lets developers build consistent, secure, and modular connections between AI systems and independent services.

This guide walks through the design and implementation of a basic MCP-compliant semantic search server for Markdown documents. You’ll build a server that exposes indexing and search tools and works with any MCP-enabled host application.

Whether you’re building AI applications, integrating external data sources, or developing custom AI tools, this hands-on tutorial will get you started with a Model Context Protocol implementation.

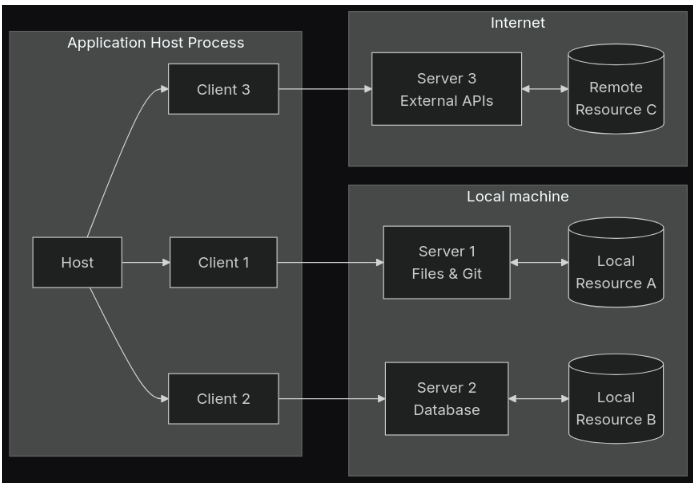

Understanding Model Context Protocol (MCP) Architecture

The Model Context Protocol gives AI applications access to external tools and data through a standardized architecture built on JSON-RPC messages. It has three core components: the host, the client, and the server.

MCP Host: The Central Command Center

The MCP Host serves as the primary LLM-powered application (such as Cursor, Claude Desktop, or custom AI applications) and handles:

- User interaction management and context preservation across multiple client sessions

- Client lifecycle control with automated creation and management of client instances

- Permission enforcement and comprehensive user authorization frameworks

- Security policy implementation with consent management and access controls

- LLM sampling coordination and seamless integration workflows

MCP Client: The Protocol Bridge

An MCP Client functions as a dedicated protocol agent that maintains a stateful 1:1 connection with specific servers, providing:

- Bidirectional message routing between host applications and MCP servers

- Protocol feature negotiation and capability discovery mechanisms

- Subscription management and real-time notification handling

- Security boundary enforcement with comprehensive access controls

- Multi-language implementation support (Python, JavaScript, TypeScript, etc.)

MCP Server: The Capability Provider

An MCP Server operates as an independent process (local or remote) that delivers:

- Resource exposure for data access and management

- Tool functionality for executing specific functions and computations

- Prompt templates for standardized AI interactions and task automation

- Isolated operation: each server runs independently and cannot see the full conversation or other servers

- Focused responsibility handling with specialized capability domains

- LLM sampling requests through client-mediated communication

- Security compliance with host-driven constraint enforcement

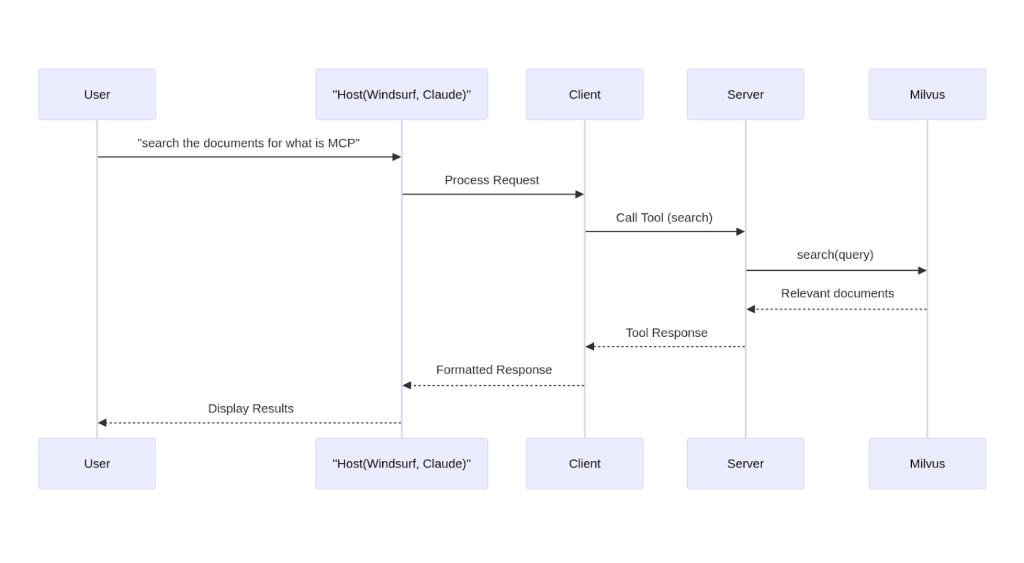

MCP Data Flow: Step by Step Process

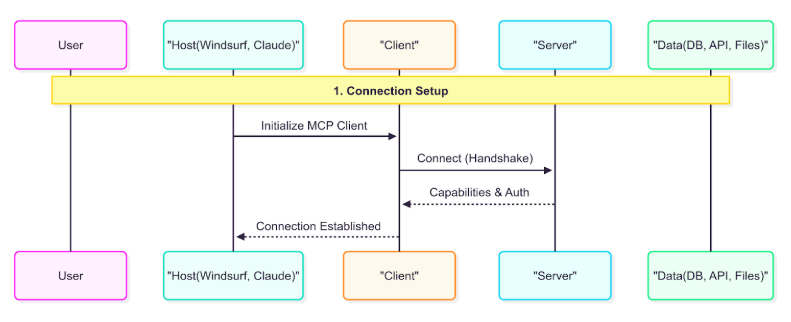

The Model Context Protocol data flow breaks down into three stages:

Stage 1: Connection Initiation

The MCP initiation process establishes the foundational connection:

- Host initialization: Primary application creates and configures a new MCP client instance

- Server connection: Client establishes secure communication channel with target MCP server

- Capability discovery: Client fetches and validates server-supported capabilities and features

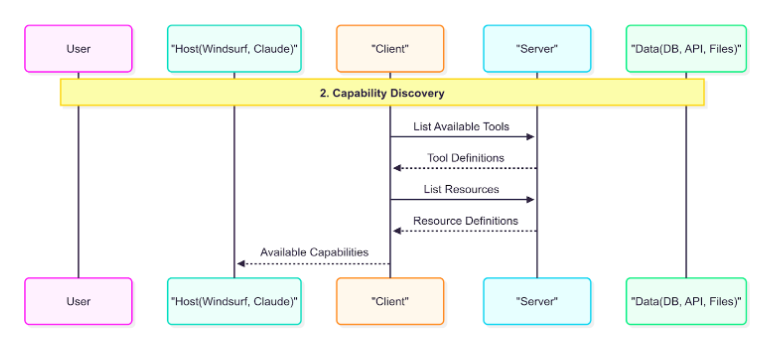
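To make the handshake concrete, here is a minimal sketch of the JSON-RPC 2.0 messages exchanged during initialization, using only the standard library. The helper names, the client name `example-host`, the protocol revision string, and the sample server reply are assumptions for illustration; a real client library builds and validates these messages for you.

```python
import json

# Assumed protocol revision for this sketch; use whichever revision
# your client and server actually negotiate.
PROTOCOL_VERSION = "2024-11-05"


def make_initialize_request(request_id: int) -> dict:
    """Build the `initialize` request that a client sends first."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {},  # client-side features offered (none here)
            "clientInfo": {"name": "example-host", "version": "0.1.0"},
        },
    }


def make_initialized_notification() -> dict:
    """Notifications carry no `id`, so the server sends no reply."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def server_supports_tools(initialize_response: dict) -> bool:
    """Check the capabilities object returned by the server."""
    result = initialize_response.get("result", {})
    return "tools" in result.get("capabilities", {})


# Hypothetical server reply, hand-written for illustration.
SAMPLE_INIT_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {"tools": {}},
        "serverInfo": {"name": "markdown", "version": "0.0.0"},
    },
}

if __name__ == "__main__":
    print(json.dumps(make_initialize_request(1), indent=2))
    print("tools supported:", server_supports_tools(SAMPLE_INIT_RESPONSE))
```

The client sends the notification only after it has received and accepted the server reply, which is why the capability check sits between the two messages.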

Stage 2: Capability Discovery and Registration

The capability discovery phase enables dynamic feature registration:

- Tool enumeration: Client requests comprehensive list of available tools and functions

- Resource mapping: Server responds with detailed capability definitions including names, descriptions, and parameters

- Host integration: Primary application updates its available capabilities registry for user access

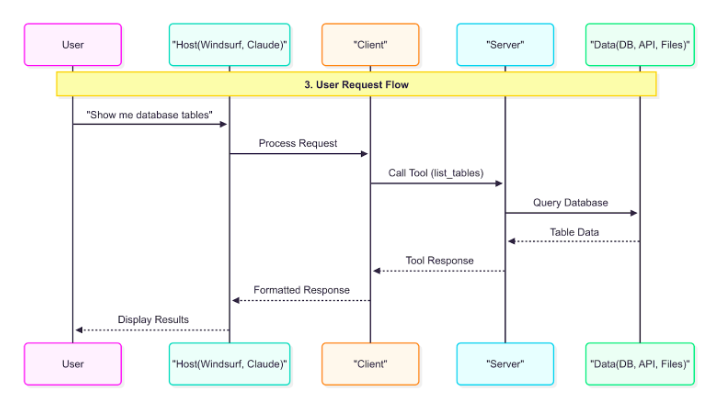
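The discovery step can be sketched as a `tools/list` request followed by a registry update on the host side. The sample response and its input schemas are hand-written assumptions for the two tools built later in this guide, and the `server/tool` key format is a choice made for this sketch rather than anything the protocol prescribes.

```python
def make_tools_list_request(request_id: int) -> dict:
    """Build the JSON-RPC 2.0 request that asks a server for its tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


# Hypothetical reply; a real server generates these schemas itself.
SAMPLE_TOOLS_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {
        "tools": [
            {
                "name": "index_documents",
                "description": "Index Markdown files in a directory.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"directory": {"type": "string"}},
                },
            },
            {
                "name": "do_semantic_search",
                "description": "Return the top-k chunks for a query.",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "query": {"type": "string"},
                        "k": {"type": "integer"},
                    },
                    "required": ["query"],
                },
            },
        ]
    },
}


def register_tools(registry: dict, server_name: str, response: dict) -> dict:
    """Copy each advertised tool into the host registry.

    Keys are prefixed with the server name so that tools from
    different servers cannot collide.
    """
    for tool in response["result"]["tools"]:
        registry[f"{server_name}/{tool['name']}"] = tool
    return registry


if __name__ == "__main__":
    registry = register_tools({}, "markdown", SAMPLE_TOOLS_RESPONSE)
    for key, tool in registry.items():
        print(key, "->", tool["description"])
```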

Stage 3: Active Capability Utilization

The capability utilization workflow demonstrates MCP’s practical implementation:

- User query processing: Primary application receives and analyzes user requests

- Client forwarding: Host application routes processed queries to appropriate MCP client

- LLM tool selection: Client leverages language model to determine optimal tool usage and parameters

- Server execution: MCP server processes tool calls with provided parameters and context

- Result propagation: Execution results flow back through client and LLM to host application
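The server execution and result propagation steps above correspond to a `tools/call` request and its result. The sketch below shows one plausible shape for both, assuming a text-only result; the helper names and the sample response are illustrative, not output captured from a real server.

```python
def make_tool_call_request(request_id: int, name: str, arguments: dict) -> dict:
    """Build the JSON-RPC 2.0 request that invokes a tool by name."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Join the text items of a tool result, raising on either error form."""
    if "error" in response:  # protocol-level failure (e.g. unknown method)
        raise RuntimeError(response["error"]["message"])
    result = response["result"]
    text = "\n".join(
        item["text"]
        for item in result.get("content", [])
        if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text


# Hypothetical reply, hand-written for illustration.
SAMPLE_CALL_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {
        "content": [{"type": "text", "text": "## Setup\nInstall uv first."}],
        "isError": False,
    },
}

if __name__ == "__main__":
    request = make_tool_call_request(
        3, "do_semantic_search", {"query": "how do I install uv?", "k": 3}
    )
    print(request["params"])
    print(extract_text(SAMPLE_CALL_RESPONSE))
```

Keeping the two error paths separate lets the host distinguish a broken connection or bad request from a tool that ran and failed, which the LLM can often recover from.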

Model Context Protocol Benefits: Why MCP Matters

The Model Context Protocol implementation provides significant advantages for AI application development:

Modular Responsibility Separation

- Host applications focus on user interaction and experience optimization

- MCP clients handle protocol communication and message routing

- MCP servers deliver specialized capabilities and tool functionality

This architectural approach enables developers to extend LLM applications with new tools and data sources while maintaining security, efficiency, and scalability standards.

Building an MCP Server: Python Implementation Guide

This section demonstrates creating a practical MCP server implementation that supports semantic operations over Markdown documents. Our server exposes two primary tools: document indexing and semantic search functionality.

Development Environment Setup

Set up the development environment with the following commands:

# Install uv package manager

curl -LsSf https://astral.sh/uv/install.sh | sh

# Create new MCP project

uv init markdown-rag

cd markdown-rag

# Create and activate virtual environment

uv venv

source .venv/bin/activate

# Add FastMCP dependency

uv add fastmcp

# Create server implementation file

touch server.py

MCP Server Initialization

Initialize your semantic search MCP server with the FastMCP framework:

# server.py

from fastmcp import FastMCP

# Initialize MCP server instance

mcp = FastMCP("markdown")

Implementing MCP Tools: Document Processing and Search

Tool 1: Document Indexing Implementation (index_documents)

The document indexing MCP tool processes Markdown files and creates searchable embeddings:

from typing import Optional

@mcp.tool()

async def index_documents(directory: Optional[str] = None):

    """

    Document indexing for MCP semantic search:

    - Reads and processes Markdown files from the specified directory

    - Segments content by heading to preserve semantic structure

    - Generates embeddings using a lightweight local model

    - Stores vector representations with metadata in a Milvus database

    """

Key Implementation Features:

- Heading-based chunking preserves document semantic structure

- Local embedding generation ensures privacy and reduces API dependencies

- Milvus vector storage provides scalable similarity search capabilities

- Metadata preservation enables rich search result context
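As an illustration of heading-based chunking, the sketch below splits a Markdown string into one chunk per heading and keeps the heading text and level as metadata. The splitting rule (ATX `#` headings only, with fenced code ignored) and the `Chunk` type are assumptions made for this example; the repository may segment documents differently.

```python
import re
from dataclasses import dataclass

# Matches ATX headings such as "# Title" or "### Subsection".
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


@dataclass
class Chunk:
    heading: str  # heading text, "" for content before the first heading
    level: int    # 1-6, or 0 for content before the first heading
    text: str     # body text under the heading


def chunk_by_heading(markdown: str) -> list[Chunk]:
    """Split a Markdown document into one chunk per heading."""
    chunks: list[Chunk] = []
    heading, level, body = "", 0, []
    in_fence = False

    def flush() -> None:
        # Sections with no body text are skipped.
        text = "\n".join(body).strip()
        if text:
            chunks.append(Chunk(heading, level, text))

    for line in markdown.splitlines():
        # Track fenced code so "# comment" lines are not read as headings.
        if line.lstrip().startswith("```"):
            in_fence = not in_fence
        match = None if in_fence else HEADING.match(line)
        if match:
            flush()
            heading, level, body = match.group(2).strip(), len(match.group(1)), []
        else:
            body.append(line)
    flush()
    return chunks


if __name__ == "__main__":
    sample = "# Setup\nInstall uv.\n## Usage\nRun the server.\n"
    for chunk in chunk_by_heading(sample):
        print(chunk.level, chunk.heading, "->", chunk.text)
```

Each chunk would then be embedded and stored together with its heading, so that a search hit can report which section it came from.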

Tool 2: Semantic Search Implementation (do_semantic_search)

The semantic search MCP tool performs intelligent document retrieval:

@mcp.tool()

async def do_semantic_search(query: str, k: int = 7):

    """

    Semantic search for MCP applications:

    - Converts user queries into vector representations

    - Retrieves the top-k relevant document chunks from the vector database

    - Returns formatted, contextual content for LLM consumption

    - Optimizes results for AI-powered synthesis and analysis

    """

Search Optimization Features:

- Query vectorization using consistent embedding models

- Similarity ranking with configurable result limits

- Structured responses optimized for LLM processing

- Context preservation for accurate result interpretation
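The query vectorization and similarity ranking described above can be sketched without any external services. In this example `toy_embed` is a hashed bag-of-words stand-in, not the embedding model the server uses, and a plain Python list replaces Milvus; both are assumptions chosen so the example runs with the standard library alone.

```python
import hashlib
import math
import re

DIM = 64  # vector size for the toy embedding


def toy_embed(text: str) -> list[float]:
    """Hash each word into one of DIM buckets and L2-normalize the counts."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        digest = hashlib.md5(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Dot product; equals cosine similarity because inputs are unit length."""
    return sum(x * y for x, y in zip(a, b))


def top_k(query: str, chunks: list[str], k: int = 7) -> list[tuple[float, str]]:
    """Embed the query the same way as the chunks and return the k best."""
    query_vec = toy_embed(query)
    scored = [(cosine(query_vec, toy_embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return scored[:k]


if __name__ == "__main__":
    corpus = [
        "Semantic search over Markdown documents",
        "Install uv and create a virtual environment",
        "Configure the host application",
    ]
    for score, chunk in top_k("markdown semantic search", corpus, k=2):
        print(f"{score:.3f}  {chunk}")
```

The important property carried over from the real tool is that queries and chunks pass through the same embedding function; swapping `toy_embed` for a real model and the list for a vector database leaves the ranking logic unchanged.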

MCP Server Execution Configuration

Run the server over the standard input/output (stdio) transport:

if __name__ == "__main__":

    mcp.run(transport="stdio")
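FastMCP handles the transport for you, but it can help to see roughly what a stdio transport does: each JSON-RPC message travels as a single line of JSON, with requests read from standard input and responses written to standard output. The loop below is a simplified sketch under that assumption; `serve_stdio` and `handle` are hypothetical names, and the handler answers only `ping`.

```python
import io
import json
from typing import Callable, Optional, TextIO


def handle(message: dict) -> Optional[dict]:
    """Toy handler: answers `ping`, rejects every other request."""
    if "id" not in message:  # notifications get no response
        return None
    if message.get("method") == "ping":
        return {"jsonrpc": "2.0", "id": message["id"], "result": {}}
    return {
        "jsonrpc": "2.0",
        "id": message["id"],
        "error": {"code": -32601, "message": "Method not found"},
    }


def serve_stdio(
    reader: TextIO,
    writer: TextIO,
    handler: Callable[[dict], Optional[dict]],
) -> None:
    """Read one JSON message per line and write one JSON reply per line."""
    for line in reader:
        line = line.strip()
        if not line:
            continue
        response = handler(json.loads(line))
        if response is not None:
            # json.dumps escapes newlines inside strings, so each
            # serialized message stays on a single line.
            writer.write(json.dumps(response) + "\n")
            writer.flush()


if __name__ == "__main__":
    # In-memory streams stand in for sys.stdin and sys.stdout here.
    incoming = io.StringIO('{"jsonrpc": "2.0", "id": 1, "method": "ping"}\n')
    outgoing = io.StringIO()
    serve_stdio(incoming, outgoing, handle)
    print(outgoing.getvalue(), end="")
```

Because standard output carries protocol messages in this setup, a server using stdio should send its own logging to standard error instead.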

MCP Integration Workflow: End-to-End Process

End to end, a request moves through the system as follows:

- User Query Submission: User submits queries through MCP-enabled host applications

- Host Processing: Primary application analyzes and forwards queries to MCP client

- LLM Tool Selection: Client sends requests to language model, including query context and available tools

- Tool Parameter Generation: LLM determines appropriate tool selection and generates required parameters

- Server Tool Execution: MCP client invokes selected tools on server with generated parameters

- Result Processing: Server executes tasks and returns structured output to client

- LLM Synthesis: Client passes tool outputs to language model for final response generation

- Response Delivery: LLM formulates comprehensive responses for user presentation

- User Interface Display: Host application presents final results through user interface

This MCP interaction pattern keeps concerns cleanly separated, which makes each component easier to reuse, test, and maintain.
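The workflow above can be compressed into a small orchestration function. Everything in the sketch below is a stand-in: `pick_tool_stub` and `synthesize_stub` replace the language model, `search_stub` replaces the server tool, and a plain function call replaces the JSON-RPC round trip, so it shows only the order of operations.

```python
from typing import Callable, Dict, Tuple

Tool = Callable[..., str]


def search_stub(query: str, k: int = 7) -> str:
    """Stand-in for the server's do_semantic_search tool."""
    return f"top {k} chunks for '{query}'"


def pick_tool_stub(query: str, tools: Dict[str, Tool]) -> Tuple[str, dict]:
    """Stand-in for the LLM tool choice: always picks the search tool."""
    return "do_semantic_search", {"query": query, "k": 3}


def synthesize_stub(query: str, tool_output: str) -> str:
    """Stand-in for the LLM composing a final answer from tool output."""
    return f"Q: {query} | context: {tool_output}"


def handle_query(
    query: str,
    tools: Dict[str, Tool],
    pick_tool: Callable[[str, Dict[str, Tool]], Tuple[str, dict]],
    synthesize: Callable[[str, str], str],
) -> str:
    """Run one pass of the workflow: select, execute, synthesize."""
    # LLM tool selection and parameter generation
    name, arguments = pick_tool(query, tools)
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    # Server tool execution and result processing
    tool_output = tools[name](**arguments)
    # LLM synthesis and response delivery
    return synthesize(query, tool_output)


if __name__ == "__main__":
    tools = {"do_semantic_search": search_stub}
    print(handle_query("how do I install uv?", tools, pick_tool_stub, synthesize_stub))
```

Passing the model-facing steps in as functions mirrors the separation of concerns: the orchestration logic can be tested with stubs like these, without a model or a running server.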

MCP Testing and Deployment Strategies

Development Testing with MCP Inspector

For MCP server debugging and inspection, leverage the official MCP Inspector tool:

npx @modelcontextprotocol/inspector uv --directory /ABSOLUTE_PATH/TO_SERVER run server.py

The MCP Inspector provides:

- Real-time server capability discovery

- Interactive tool testing and validation

- Protocol compliance verification

- Visibility into server logs and notifications during testing

Production Integration Configuration

For MCP-compatible host integration (Windsurf, Cursor, Claude Desktop), configure server settings:

{

  "mcpServers": {

    "mcp-markdown-rag": {

      "command": "uv",

      "args": ["--directory", "/ABSOLUTE_PATH/TO_SERVER", "run", "server.py"]

    }

  }

}

Once configured, the server’s tools appear in the host application, where the LLM can invoke them.
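A configuration entry like this amounts to a command line that the host launches as a child process. As a rough illustration, the sketch below parses the same JSON and assembles that command line; `launch_command` is a hypothetical helper, and real hosts may also apply environment variables or other settings that are not shown here.

```python
import json

# Same structure as the configuration above, with the placeholder path kept.
CONFIG_TEXT = """
{
  "mcpServers": {
    "mcp-markdown-rag": {
      "command": "uv",
      "args": ["--directory", "/ABSOLUTE_PATH/TO_SERVER", "run", "server.py"]
    }
  }
}
"""


def launch_command(config: dict, server_name: str) -> list[str]:
    """Return the argv list a host would use to start the named server."""
    entry = config["mcpServers"][server_name]
    return [entry["command"], *entry.get("args", [])]


if __name__ == "__main__":
    config = json.loads(CONFIG_TEXT)
    print(" ".join(launch_command(config, "mcp-markdown-rag")))
```

The resulting command is the same `uv --directory ... run server.py` invocation passed to the MCP Inspector earlier, so a server that works in the Inspector should start the same way under a host.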

Complete implementation available: MCP Markdown RAG GitHub Repository

Conclusion: The Future of AI Application Development with MCP

This Model Context Protocol implementation guide demonstrates how minimal Python code, combined with MCP standards, creates modular, AI-ready semantic search systems. The protocol’s clean design and robust abstraction layers establish MCP as a viable standard for connecting LLMs with arbitrary tools, supporting both scalability requirements and local-first deployment strategies.

Key takeaways for developers:

- MCP simplifies AI integration through standardized protocols and interfaces

- Semantic search capabilities enhance AI application functionality significantly

- Local-first deployment ensures data privacy and security compliance

- Modular architecture enables scalable, maintainable AI system development

Whether you’re building custom AI applications, integrating enterprise data sources, or developing specialized AI tools, Model Context Protocol provides the foundation for secure, scalable, and efficient AI system development.

Ready to implement MCP in your projects? Start with our complete implementation guide and join the growing community of developers leveraging Model Context Protocol for next-generation AI applications.

Frequently Asked Questions

What is Model Context Protocol?

Model Context Protocol is an open standard developed by Anthropic that enables AI assistants to securely connect with external data sources and tools. MCP standardizes how AI models access real-time information while keeping users in control of data access permissions.

Why does MCP matter for AI development?

MCP replaces one-off custom integrations with a common standard for connecting AI systems to external services. This reduces development time, improves cross-platform interoperability, and enables AI workflows that span multiple applications and platforms.

What are the main benefits of MCP?

Model Context Protocol offers several advantages for AI application development:

- Consistency: Standardizes connections between host applications and independently developed services, ensuring predictable integration patterns

- Security: Supports controlled access permissions and authorization, with enforcement handled by the host

- Modularity: Offers an extensible architecture that allows components to be developed and integrated independently

- Flexibility: Model-agnostic design supports any LLM with tool calling capabilities

What capabilities can MCP servers expose?

MCP servers can expose three primary capability types:

- Tools: Functions that let the AI perform actions, computations, and data manipulations

- Resources: Data sources that provide structured information to AI systems

- Prompts: Reusable, templated prompts for standardized AI interactions and workflows

How does MCP handle security?

MCP leaves enforcement to hosts and servers, which can implement controls such as:

- Controlled access permissions, with granular user consent for AI data and tool access

- Secure authorization, including OAuth integration and token management

- Audit logging to track AI interactions with external systems

- Sandboxed execution environments for tool calls

Does MCP work with different AI models?

Yes. Model Context Protocol is model-agnostic: it works with any large language model that supports tool calling, including GPT-4, Claude, Gemini, and open-source alternatives such as Llama and other local models.

How can companies adopt MCP?

Companies can adopt Model Context Protocol in phases:

- Assessment: Identify existing data sources and tools that would benefit from AI integration

- Prioritization: Begin with high-value, low-risk integrations to demonstrate value

- Implementation: Develop MCP servers for the selected data sources and tools

- Testing and validation: Validate security, performance, and functionality in controlled environments

- Scaling and expansion: Gradually expand to additional systems and use cases

This incremental approach limits implementation risk while building up AI integration across the organization.