Your startup is built on a transformative AI vision. You have the ambition, the team, and the drive to create a product that will redefine your industry. But between your brilliant concept and a market-dominating product lies the most critical technical foundation you will ever build: your AI infrastructure.

This is not merely a backend concern or a line item on a cloud bill. It is the engine of your innovation, the bedrock of your scalability, and the single greatest determinant of your technical velocity. Choosing the right AI infrastructure for startups is a decision with consequences that will ripple through every stage of your company’s lifecycle. A well-architected system acts as a powerful launchpad, propelling you from a Minimum Viable Product (MVP) to a high-performance, scalable solution. A poor choice becomes a financial drain and a technical anchor, crippling your ability to innovate, satisfy users, and outpace competitors.

Building a Scalable AI Infrastructure for Startups

For startups, the stakes are brutally high. You are in a relentless race against time, operating with finite funding and an urgent mandate to achieve product-market fit. You cannot afford to build on a platform that won’t scale with your success, nor can you burn precious capital on inefficient, oversized resources.

This guide is your comprehensive blueprint. We will cut through the marketing jargon and provide a clear, actionable roadmap to choosing and building the best AI infrastructure for startups. We will cover everything from the foundational cloud vs. on-premise debate to the nuances of MLOps, cost optimization, and building a future-proof tech stack that becomes your competitive advantage.

Why Your AI Infrastructure Is a Core Strategic Asset, Not a Cost Center

Before we dive into the “how,” we must internalize the “why.” Many founders mistakenly view infrastructure as a simple operational cost. This is a critical error. A well-designed AI infrastructure for startups is a strategic asset that delivers a powerful, defensible competitive advantage.

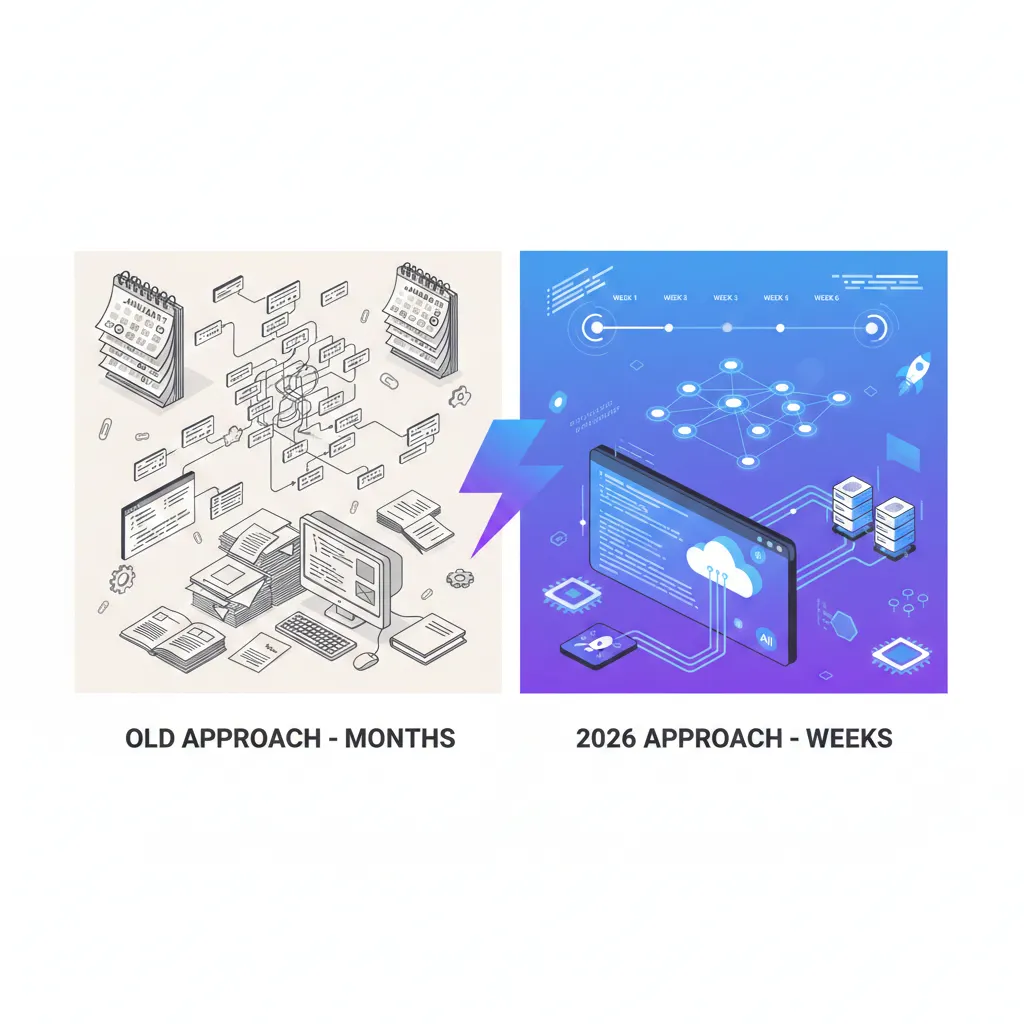

- Unmatched Velocity and Speed to Market: The right setup empowers your data scientists and engineers to experiment, iterate, and deploy models in hours, not weeks. In a world where speed is the ultimate currency, this is non-negotiable.

- Hyper-Scalability on Demand: What happens when your product is featured on a major news outlet and your user base multiplies by a factor of ten overnight? A scalable AI infrastructure seamlessly handles the surge, ensuring a flawless user experience without crashes, latency, or frantic late-night fixes. This reliability builds user trust and retention.

- Radical Capital Efficiency: For a bootstrapped or venture-backed startup, every dollar is sacred. A smart infrastructure strategy prevents you from burning cash on idle resources, maximizing your financial runway and demonstrating operational excellence to investors.

- A Magnet for Elite Talent: Top-tier AI talent is scarce, discerning, and highly sought after. They want to work with modern tools, efficient workflows, and a powerful tech stack that enables them to do their best work. A clunky, outdated infrastructure is a major red flag that will cause you to lose out on the very engineers you need to win.

Investing thought and expertise into your infrastructure today pays exponential dividends tomorrow. At Zackriya Solutions, we specialize in building these powerful yet cost-effective systems, a core component of our AI Product Engineering services.

The First Critical Decision: Choosing Your Infrastructure’s Home

The foundational choice every startup faces is where your infrastructure will physically and logically reside. While several models exist, the decision for a modern startup is simpler and clearer than ever before.

Cloud Infrastructure: The Undisputed Champion for Nearly Every Startup

For nearly every startup, going with a major cloud provider is the smartest, most efficient, and most scalable option. Providers such as Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure have built their global platforms to solve the exact challenges you face.

- Zero Upfront Capital Expenditure (CapEx): You avoid spending hundreds of thousands of dollars on physical servers, networking gear, and data center space. Instead, you pay as you go, converting a massive upfront cost into a flexible operational expenditure (OpEx) that tracks your actual usage.

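- Elastic Scalability: Need 100 powerful GPUs for a massive training job tomorrow? You can provision them on demand and terminate them when you’re done, provided your account’s GPU quota and the region’s capacity allow it; large GPU quotas usually have to be requested from the provider in advance. This elasticity is the defining advantage of cloud infrastructure for scaling AI workloads.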

- A Rich Ecosystem of Managed Services: Cloud providers handle the undifferentiated heavy lifting—hardware maintenance, networking, security patches, and data center logistics—freeing up your small, precious team to focus on building your unique product, not managing commodity servers.

On-Premise and Hybrid: The Treacherous Path for Startups

- On-Premise: This means buying, housing, and managing your own physical servers. It involves massive upfront costs, high and unpredictable maintenance overhead, and is notoriously difficult and slow to scale. The only exception is for startups with extreme, non-negotiable data sovereignty or security constraints (e.g., certain government or healthcare contracts that explicitly forbid cloud usage).

- Hybrid Cloud: This approach mixes public cloud services with private, on-premise hardware. While powerful for mature enterprises optimizing specific legacy workloads, it introduces a level of complexity that is an unnecessary and dangerous distraction for most startups.

Founder’s Takeaway: Start with the cloud. Do not let anyone convince you to build an on-premise data center. Your singular focus should be on leveraging the immense power and agility of AWS, GCP, or Azure to build and scale with maximum velocity.

What’s the Most Startup-Friendly AI Infrastructure Provider?

While all major clouds are excellent, they possess different strengths, philosophies, and “sweet spots.” The most startup-friendly AI infrastructure provider is the one that best aligns with your team’s existing skills, your product’s technical requirements, and your long-term vision.

| Provider | Key Strengths for Startups | Ideal For… |
|---|---|---|
| Google Cloud (GCP) | Best-in-class, deeply integrated AI/ML services (Vertex AI is superb), superior global networking, and deep roots in Kubernetes (GKE), TensorFlow, and TPUs, all of which originated at Google. Often offers very generous startup credits and a data-first culture. | Startups with a heavy focus on deep learning, large-scale data analytics, and those who want the most seamless, integrated AI development platform from data ingestion to model deployment. |
| Amazon Web Services (AWS) | The most mature and comprehensive platform, with the broadest array of services for any conceivable need. Its ecosystem is vast, community support is unmatched, and Amazon SageMaker provides a powerful, modular ML platform that can be customized extensively. | Teams that need maximum flexibility and choice. Ideal for startups that anticipate needing a wide variety of services (beyond just AI) and want the security of the market leader’s vast documentation, talent pool, and third-party tool support. |
| Microsoft Azure | Excellent integration with the enterprise and developer ecosystem (GitHub, VS Code, Microsoft 365). Azure Machine Learning offers a user-friendly, drag-and-drop Designer and low-code options that cater to various skill levels. | Startups already invested in the Microsoft stack, those primarily targeting enterprise customers (where Azure is strong), or teams that value strong integrations and a visual approach to building ML pipelines. |

The Anatomy of a World-Class, Scalable AI Infrastructure

Once you’ve chosen a provider, you must assemble the right building blocks. The best AI infrastructure platforms for startups are not monolithic; they are built from three critical, interconnected layers. This is the core of our AI Infrastructure Services.

Layer 1: The Data Layer – The High-Octane Fuel for Your AI Engine

Your models are only as good as the data they consume. This layer must be robust, scalable, secure, and optimized for high-speed access.

- The Data Lake (Your Single Source of Truth): The foundation of your entire data strategy. Use a cloud object storage service like Amazon S3 or Google Cloud Storage. It is inexpensive per gigabyte, scales virtually without limit, and can store all your raw and processed data (images, text, logs, videos, and more) in its native format.

- The Data Warehouse (For Structured Analytics and BI): For structured data, business intelligence, and fast analytical queries, a cloud data warehouse is essential. Leading options include Google BigQuery, Amazon Redshift, and the cloud-agnostic Snowflake.

- Data Processing and Transformation (ETL/ELT): You need a powerful engine to transform raw data from your data lake into clean, feature-engineered formats ready for model training. Apache Spark, run through a managed service like Databricks (recommended for its collaborative features) or Amazon EMR, is the de facto industry standard for large-scale data processing.

Layer 2: The Compute Layer – The Engine for Training and Inference

This is where the heavy lifting of model training and real-time prediction happens. It needs to be both immensely powerful and elastically scalable.

- Accelerated Compute (GPUs & TPUs): Training modern deep learning models is impractical without specialized hardware. All major cloud providers offer on-demand access to powerful GPUs (like NVIDIA’s A100s or H100s), and Google Cloud additionally offers TPUs, its custom accelerators for ML.

- Containerization (Docker & Kubernetes): This is a non-negotiable best practice for modern software and AI. Docker packages your code and all its dependencies into a portable, isolated container. Kubernetes (best used via a managed service like GKE, EKS, or AKS) then orchestrates these containers, making it easy to reliably deploy, scale, and manage your applications across a cluster of machines.

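- Serverless Computing: For tasks with unpredictable or “spiky” traffic, like event-driven data preprocessing or real-time inference APIs for lightweight models, serverless platforms like AWS Lambda or Google Cloud Functions can be very cost-effective. You pay only for the compute time you actually use (AWS Lambda, for example, bills in 1 ms increments), so idle servers cost you nothing. The trade-offs are cold-start latency and limited hardware: Lambda, for instance, runs on CPUs only, so large models still belong on GPU instances or containers.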
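To make the serverless pattern concrete, here is a minimal Python sketch of a real-time inference function written against the `handler(event, context)` signature AWS Lambda expects for Python (the function name itself is configurable), assuming an API Gateway proxy event whose `body` is a JSON string. The logistic-regression weights (`WEIGHTS`, `BIAS`) and the `predict` helper are hypothetical placeholders for a real lightweight model; defining them at module scope lets warm invocations reuse them instead of reloading on every request.

```python
import json
import math

# Hypothetical toy model: logistic-regression weights loaded once at import time,
# so warm invocations in the same execution environment reuse them.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def predict(features):
    """Return the positive-class probability for one feature vector."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))


def lambda_handler(event, context):
    """Entry point shaped for an API Gateway proxy event (body is a JSON string)."""
    try:
        payload = json.loads(event.get("body") or "{}")
        features = [float(x) for x in payload["features"]]
        if len(features) != len(WEIGHTS):
            raise ValueError(f"expected {len(WEIGHTS)} features")
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed JSON, missing keys, or wrong feature count -> client error.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"probability": predict(features)}),
    }
```

Because the handler is a plain function, you can unit-test it locally before deploying: calling `lambda_handler({"body": json.dumps({"features": [1, 2, 3]})}, None)` returns a 200 response with a probability of roughly 0.65.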

Layer 3: The MLOps Layer – Your AI Factory’s Assembly Line

MLOps (Machine Learning Operations) is the discipline that applies DevOps principles to the machine learning lifecycle. For a startup, a robust MLOps setup is your secret weapon for moving fast without breaking things. This is the defining characteristic of the best AI infrastructure platforms.

- Experiment Tracking: Tools like Weights & Biases, Comet ML, or the open-source MLflow are essential. They log every single experiment—your code version, data hash, hyperparameters, and resulting metrics—creating a reproducible, auditable, and searchable history of your research. This prevents “lost” work and enables deep collaboration.

- CI/CD/CT for ML (Continuous Integration, Continuous Deployment, and Continuous Training): Using tools like GitHub Actions, GitLab CI, or Jenkins, you can build automated pipelines that retrain, test, and deploy models whenever new code is pushed or, more importantly, whenever new data becomes available.

- Model Registry: A central, version-controlled repository to store your trained and validated model artifacts. This makes it trivial to deploy a specific model version to production or instantly roll back to a previous, stable version if issues arise.

- Model Monitoring: Your job isn’t done when the model is deployed. You must continuously monitor its performance in the real world. This means tracking not just system metrics (latency, errors) but also model-specific metrics that detect data drift (when the statistical distribution of the inputs shifts) and concept drift (when the relationship between inputs and the correct outputs changes), and automatically alerting you when a model needs to be retrained.
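Drift detection is the least familiar of these practices, so here is one common way to score data drift, sketched in standard-library Python: the Population Stability Index (PSI), which sums (a_i − e_i) · ln(a_i / e_i) over bins, where e_i and a_i are the shares of a feature’s values falling in bin i in the training baseline and in production. PSI is our choice for illustration, not the only option (tests such as Kolmogorov–Smirnov are also widely used), the function names are hypothetical, and the 0.25 alert threshold is a commonly cited rule of thumb rather than a universal constant. This checks data drift only; detecting concept drift additionally requires ground-truth labels from production.

```python
import math


def psi(baseline, production, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature: baseline vs. production."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the baseline range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(production)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_alert(score, threshold=0.25):
    """Flag a feature for retraining review; 0.25 is a rule-of-thumb cutoff."""
    return score > threshold
```

In practice you would run this per feature on a schedule (for example, the last day of production inputs against the training set) and route `drift_alert` results to your alerting channel or retraining pipeline.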

Building the Best Generative AI Infrastructure for Your Tech Startup

The rise of Large Language Models (LLMs) and diffusion models has introduced a new, more demanding class of infrastructure requirements. If you are building a generative AI infrastructure for your tech startup, you must obsess over these three areas:

- Access to Elite, Tightly-Coupled GPU Clusters: You need more than just powerful GPUs; you need large clusters of them. This means platforms offering fleets of NVIDIA H100s or equivalent accelerators connected by high-bandwidth, low-latency interconnects: NVIDIA’s NVLink and NVSwitch within a server, and fabrics such as InfiniBand between servers. These links let the GPUs exchange data fast enough to behave like a single, massive supercomputer, which is essential for large-model training.

- Mastery of Distributed Training Frameworks: Training a model with billions of parameters, and often even fully fine-tuning one, relies on distributed training tools like DeepSpeed (from Microsoft), PyTorch FSDP (Fully Sharded Data Parallel), or JAX’s built-in sharding support. These frameworks shard the model’s weights, gradients, and optimizer states across many GPUs (up to hundreds) so that no single device has to hold them all.

- A Fanatical Focus on Inference Optimization: Deploying these giant models cost-effectively is a monumental challenge, because a single inference call can be slow and expensive. Your infrastructure must support advanced techniques like quantization (reducing weight precision from 16- or 32-bit floating point to 8-bit or 4-bit formats), distillation (training a smaller, faster model to mimic the large one), and specialized, high-throughput inference servers like NVIDIA Triton Inference Server or vLLM.
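A quick worked example shows why quantization matters. Weight memory is simply parameter count times bits per weight, so a hypothetical 7-billion-parameter model needs 28 GB at 32-bit, 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit, before counting activations or the KV cache. The Python sketch below computes that figure and implements the simplest form of quantization, symmetric per-tensor int8, on a plain list of floats. The function names are hypothetical and the scheme is deliberately minimal; production toolchains typically use per-channel or per-group scales and calibration data.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """GB needed just to store the weights (excludes activations and KV cache)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated as scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    for bits in (32, 16, 8, 4):
        print(f"7B params at {bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
    weights = [0.5, -1.27, 0.0, 1.0, 0.333]
    codes, scale = quantize_int8(weights)
    print(codes, dequantize(codes, scale))
```

Each weight now takes one byte instead of four, and the rounding error per weight is at most half of `scale`; that trade of a small, bounded precision loss for a 4x memory cut is what makes large models cheaper to serve.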

What Are the Most Cost-Effective ML Platforms and Tools for Startups?

Beyond the major clouds, a rich ecosystem of tools can dramatically reduce your costs and accelerate your development time. The most cost-effective ML platforms for startups are often not single, monolithic platforms, but a smart, layered combination of the following:

- Hugging Face: Absolutely indispensable for any startup working with NLP, audio, or computer vision. Using one of the many openly available, pre-trained models on the Hugging Face Hub as a starting point for fine-tuning can save you hundreds of thousands, if not millions, of dollars in compute costs compared to training from scratch.

- Google Colab / Kaggle Notebooks: These platforms offer free, usage-limited GPU and TPU access for prototyping, collaboration, and experimentation. They are the perfect sandbox for your data science team to explore and validate new ideas with zero initial investment.

- Databricks: Provides a unified, collaborative platform for data engineering and machine learning on top of your data lake. By simplifying complex data pipelines and optimizing Spark jobs, it can significantly reduce infrastructure management overhead and total cost of ownership.

- Open-Source Foundations: Leveraging foundational libraries like PyTorch, TensorFlow, Scikit-learn, XGBoost, and Pandas is completely free and gives you direct access to the cutting edge of AI research. A deep understanding of these tools is essential.

Where to Find Cost-Effective AI Hardware and Compute for Startups

While the cloud is your primary environment, how you consume cloud compute is the single most critical factor in managing your burn rate. If you’re looking for cost-effective AI hardware for startups, these are your most powerful strategies:

- Become a Master of Cloud Spot Instances: This is the #1, non-negotiable cost-saving strategy for AI startups. Use Spot Instances (AWS), Spot VMs (GCP and Azure), or GCP’s older Preemptible VMs for all fault-tolerant workloads, especially model training. Discounts can reach up to 90% off on-demand prices. In exchange, the provider can reclaim the capacity at short notice, so your MLOps pipeline must handle interruptions gracefully by checkpointing and resuming training.

- Explore Specialized GPU Cloud Providers: For very long, uninterrupted training runs where the cost of interruption is high, niche providers like Lambda Labs, CoreWeave, or Paperspace can sometimes offer better raw price-performance on GPU rentals than the major clouds. They are an excellent option to have in your toolkit for specific jobs.

- Implement Ruthless, Automated Shutdowns: The cheapest hardware is the hardware that is turned off. An idle NVIDIA A100 GPU can cost over $3 per hour at on-demand rates, depending on the provider and region. Never pay for it to do nothing. Create automated scripts and policies to shut down expensive GPU instances and entire development and staging environments during nights and weekends.

- Practice Continuous Right-Sizing: Constantly monitor your resource utilization using cloud-native tools. Don’t use a massive 8-GPU instance when a single GPU will do. Don’t provision a huge Spark cluster for a small data job. Continuously analyzing and adjusting your instance types to match the workload is key to a lean infrastructure.
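Checkpoint-and-resume is what makes spot capacity safe to use for training. The Python sketch below uses a hypothetical toy loop (`train`, `save_checkpoint`, and `load_checkpoint` are illustrative names, and the "loss" is a stand-in for a real optimizer step) to show the pattern: save state periodically, write each checkpoint atomically so a reclaimed instance cannot leave a half-written file behind, and on restart resume from the last saved step. In a real pipeline you would save framework state (for example with `torch.save`) to durable object storage such as S3 or Google Cloud Storage, since an instance’s local disk may disappear with it, and you could also save immediately when the provider’s interruption notice arrives (AWS gives a two-minute warning).

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the checkpoint atomically so an interruption mid-write cannot corrupt it."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path, total_steps, every=10, stop_after=None):
    """Hypothetical toy training loop that resumes from the last checkpoint.

    stop_after simulates a spot interruption after that many steps of this run.
    """
    state = load_checkpoint(path)
    steps_this_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_this_run == stop_after:
            return state  # instance reclaimed: progress since the last save is lost
        state["step"] += 1
        state["loss"] = 1.0 / state["step"]  # stand-in for a real optimizer update
        steps_this_run += 1
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)  # final save once training completes
    return state
```

If a run with `every=10` is interrupted after step 35, the next run restarts from step 30: at most `every` steps of work are lost, which is the knob you tune against the time and storage cost of each checkpoint.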

By meticulously combining a major cloud provider with the aggressive use of spot instances, best-in-class open-source tools, and ruthless automation, you can build a world-class AI infrastructure for your startup that is both immensely powerful and incredibly cost-efficient. This isn’t just about saving money; it’s about building a sustainable, scalable foundation that sets you up to win.

For a deeper dive into how these principles are applied in the real world, explore our Case Studies, where we break down how we’ve built scalable AI platforms for clients across various industries.

Ready to design and build a robust, cost-effective AI infrastructure for your startup? Contact us.

Frequently Asked Questions

Which cloud provider is best for an AI startup?

There’s no single “best” one; they are all excellent.

GCP is often praised for its strengths in data analytics (BigQuery) and Kubernetes (GKE), and it’s the home of TPUs.

AWS has the largest market share and the most extensive suite of services, with a very mature AI/ML ecosystem in SageMaker.

Azure is a strong contender, especially for startups that are already in the Microsoft ecosystem. The best choice often depends on your team’s existing expertise.

How much should a startup expect to spend on AI infrastructure?

This varies wildly. A very early-stage startup might spend a few hundred dollars a month. A startup actively training large models could spend tens of thousands. The key is to start small, monitor your costs obsessively, and only scale your spending as your product and revenue grow.

Do we need a dedicated MLOps engineer from day one?

Probably not. In the beginning, your data scientists or ML engineers can handle the basics. However, as soon as you have a model in production and are iterating frequently, hiring an MLOps specialist or working with a consultancy like Zackriya can provide a massive ROI by accelerating your development cycle.

What infrastructure do Agentic AI systems need?

Building sophisticated Agentic AI systems requires a highly reliable and low-latency infrastructure. You’ll need robust API endpoints for your models, real-time data pipelines, and scalable compute for the agents’ “thinking” processes. The principles in this guide (containerization, MLOps, and scalability) are even more critical for agentic AI.